Oxen.ai Blog

Welcome to the Oxen.ai blog 🐂

The team at Oxen.ai is dedicated to helping AI practitioners go from research to production. To help enable this, we host a research paper club on Fridays called ArXiv Dives, where we go over state-of-the-art research and how you can apply it to your own work.

Take a look at our ArXiv Dives and Practical ML Dives, as well as a treasure trove of content on how to go from raw datasets to production-ready AI/ML systems. We cover everything from prompt engineering, fine-tuning, computer vision, natural language understanding, generative AI, and data engineering to best practices for versioning your data. So dive in and explore; we're excited to share our journey and learnings with you 🚀

An AI-generated goat rapping alongside Ludacris in Frank's RedHot's "Eat The GOAT" Super Bowl ad. The goat was fully genera...

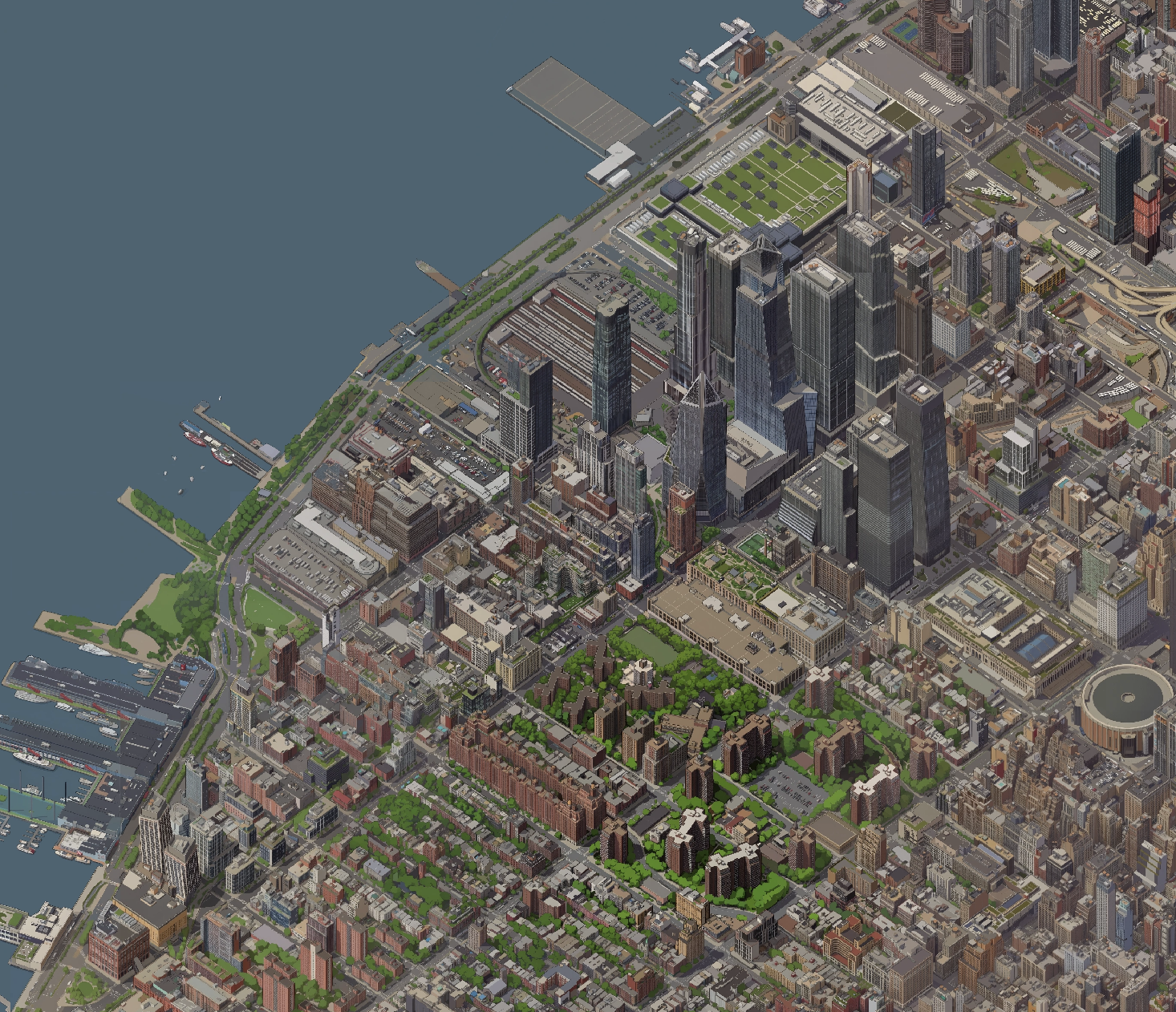

A giant isometric pixel-art map of New York City, inspired by SimCity 2000 and Rollercoaster Tycoon. Andy Coenen fine-tuned an image model on Oxen with just 40 training examples to...

A fully AI-generated commercial for the Canadian telecom giant Bell. Made in collaboration with KnuckleheadTV and amazing ...

Welcome to this week's Oxen moooodel report. We know the AI space moves like crazy. There's a new model, paper, podcast, or hot take every single day. To help y'all keep up, we're ...

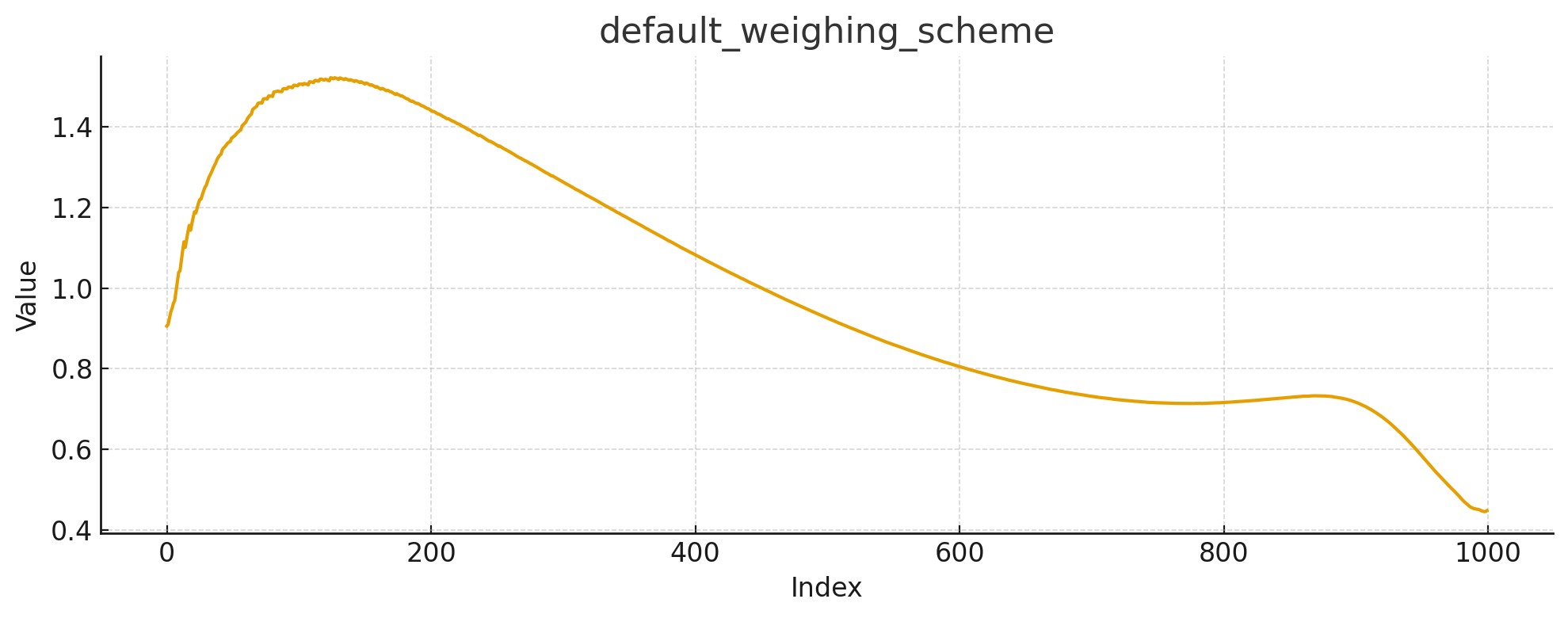

Can a $1 fine-tune beat a state-of-the-art closed-source model?

| Model | Accuracy | Time (98 samples) | Cost/Run |
|---|---|---|---|
| Base Qwen3-VL-8B | 54.1% | ~10 sec | $0.003 |
| Gemini 3 Flash | 82.7% | 2 min 46 sec | $0.016 |
| FT Q... | | | |

LTX-2 is a video generation model that can generate not only video frames but also audio. This model is fully open source, meaning the weights and the code are available for...

Imagine you are shooting a film and you realize that you have the actor wearing the wrong jacket in a scene. Do you bring the whole cast back in to re-shoot? Depending on the actor...

At Oxen.ai, we think a lot about what it takes to run high-quality inference at scale. It’s one thing to produce a handful of impressive results with a cutting-edge image editing m...

Fine-tuning diffusion models such as Stable Diffusion, FLUX.1-dev, or Qwen-Image can give you a lot of bang for your buck. Base models may not be trained on a certain concept or st...

Welcome back to Fine-Tuning Friday, where each week we put some models to the test and see if fine-tuning an open-source model can outperform whatever state-of-the-art (SOTA...