Generative Deep Learning Book - Preface

Join the Oxen.ai "Nerd Herd"

Every Friday at Oxen.ai we host a public paper club called "Arxiv Dives" to make us smarter Oxen 🐂 🧠. These are the notes from the group session for reference. If you would like to join us live, sign up here.

The first book we are going through is Generative Deep Learning: Teaching Machines To Paint, Write, Compose, and Play.

If some of the sentences or thoughts below feel incomplete, that is because they were covered in more depth in a live walkthrough, and this is meant to be more of a reference for later. Please purchase the full book, or join live to get the full details.

What is Generative Modeling?

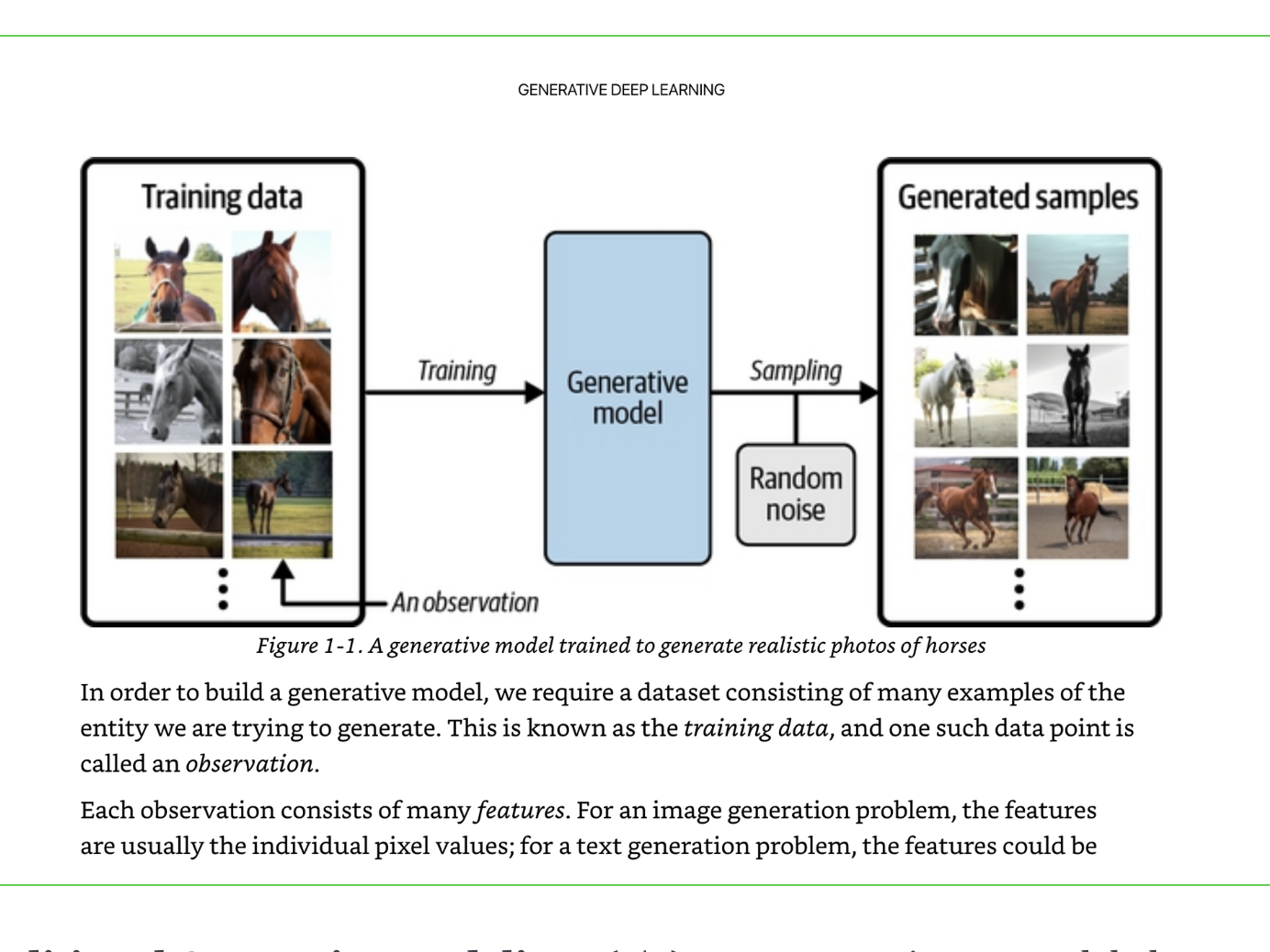

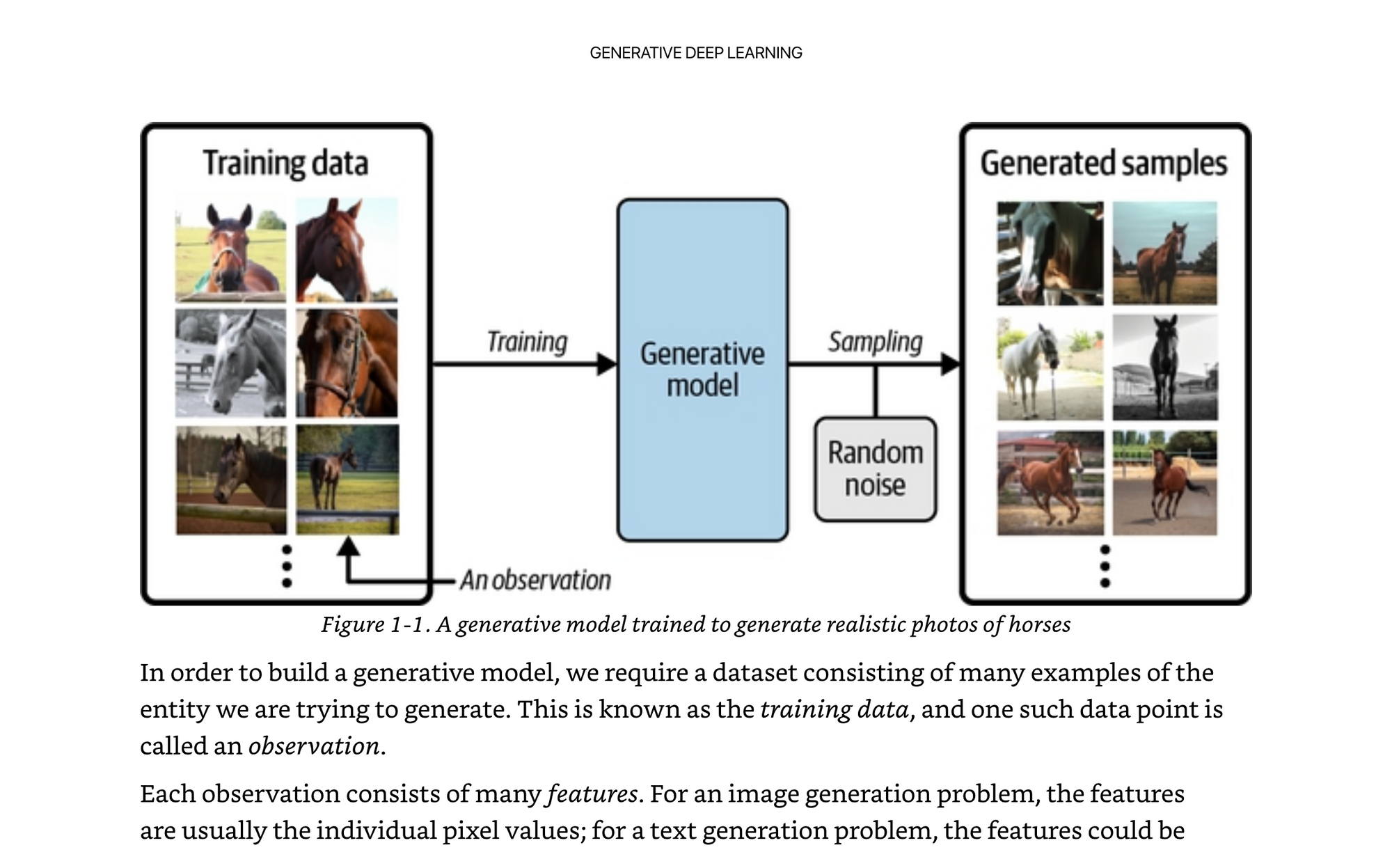

Generative modeling is a branch of machine learning that involves training a model to produce new data that is similar to a given dataset.

Generative modeling is great for capturing the nuances of real life. If you can generate something in all its forms, you understand some of its basic, fundamental properties, and that understanding can generalize to other situations.

Analogies To The Brain / Human Understanding

Think about how we navigate the world. As humans, we can generate different scenarios in our heads and act them out without actually doing them. This ability is super useful in robotics and in other AI systems that have to act in the real world.

Current neuroscientific theory suggests that our perception of reality is not a highly complex discriminative model operating on our sensory input to produce predictions of what we are experiencing, but is instead a generative model that is trained from birth to produce simulations of our surroundings that accurately match the future.

Think about our brains… they are locked in this black box called our skull. They do not actually have any access to the physical world. All they have are electrical and chemical signals that get fed into them, which they have to encode. Whether it is photons turned into electrical signals by your eyes, or your hands on the keyboard making contact with your skin, your brain never actually sees or touches these things.

What it does is take all of these electrical signals and synthesize them, abstract them, store them away, and recognize patterns in them. There are many studies in which someone loses one sense and the brain learns to repurpose the capacity for that sense to handle input from another. Blind people with sensors on their tongues, or with vibrating input patterns on their skin, can learn to "see" again, and the same goes for deaf people. The brain is just a chemical and electrical signal processor sitting in a dark black box. It doesn't care how it gets its inputs; it can learn patterns and associations to figure out what is going on outside the box.

Some theories even suggest that the output from this generative model is what we directly perceive as reality.

Discriminative vs Generative Modeling

Discriminative modeling, p(y|x): what is the probability of a label y given a data point x? For example: is this painting a Van Gogh?

Generative modeling, p(x): how likely is it that we would observe the data point x, i.e. does it look like a valid sample from the dataset? This doesn't necessarily require labels, which is one reason it is so powerful.

Conditional generative modeling, p(x|y): generate x given some label or labels y. For example, y could be the feature “has mustache” and x could be a photo of a man with a mustache. Below are examples from the CelebA dataset that could be used to conditionally generate human faces.
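To make the three quantities concrete, here is a minimal sketch that estimates each one by counting over a tiny hypothetical dataset, where x is a single discrete feature (“swirly” or “flat” brushwork) and y is whether the painting is a Van Gogh. The data and function names are made up for illustration; real images are far too high-dimensional to model by counting, which is why we need deep generative models.

```python
from collections import Counter
from fractions import Fraction
import random

# Hypothetical toy dataset of (x, y) pairs:
#   x = brushwork style of a painting ("swirly" or "flat")
#   y = whether the painting is a Van Gogh
data = (
    [("swirly", True)] * 3 + [("swirly", False)] * 2
    + [("flat", True)] * 1 + [("flat", False)] * 3
)

n = len(data)
count_x = Counter(x for x, _ in data)
count_y = Counter(y for _, y in data)
count_xy = Counter(data)

def p_x(x):
    """Generative: how likely is data point x overall? No labels needed."""
    return Fraction(count_x[x], n)

def p_y(y):
    """Prior probability of label y."""
    return Fraction(count_y[y], n)

def p_y_given_x(y, x):
    """Discriminative: probability of label y given data point x."""
    return Fraction(count_xy[(x, y)], count_x[x])

def p_x_given_y(x, y):
    """Conditional generative: probability of data point x given label y."""
    return Fraction(count_xy[(x, y)], count_y[y])

def sample_x_given_y(y, rng):
    """Generate a new x for label y by sampling from p(x|y)."""
    xs = sorted(count_x)
    return rng.choices(xs, weights=[count_xy[(x, y)] for x in xs])[0]

print(p_x("swirly"))                # 5/9
print(p_y_given_x(True, "swirly"))  # 3/5
print(p_x_given_y("swirly", True))  # 3/4

# Bayes' rule links the three: p(y|x) = p(x|y) p(y) / p(x)
assert p_y_given_x(True, "swirly") == p_x_given_y("swirly", True) * p_y(True) / p_x("swirly")

print(sample_x_given_y(True, random.Random(0)))  # "swirly" or "flat", weighted 3:1
```

Note that p(y|x) and p(x|y) are different numbers here (3/5 vs 3/4): knowing a painting is swirly tells you something different from knowing it is a Van Gogh.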

A generative model must also be probabilistic rather than deterministic, because we want to be able to sample many different variations of the output, rather than get the same output every time. If our model is merely a fixed calculation, such as taking the average value of each pixel in the training dataset, it is not generative. A generative model must include a random component that influences the individual samples generated by the model.

“An important point to note is that even if we were able to build a perfect discriminative model to identify Van Gogh paintings, it would still have no idea how to create a painting that looks like a Van Gogh. It can only output probabilities against existing images, as this is what it has been trained to do. We would instead need to train a generative model and sample from this model to generate images that have a high chance of belonging to the original training dataset.”
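The point about needing a random component can be shown with a minimal sketch on hypothetical 4-pixel grayscale “images”. The deterministic per-pixel average returns the same output every time, while a deliberately crude generative model, which assumes each pixel is an independent Gaussian fitted to the training data, draws a new image on every call. Real generative models learn the correlations between pixels instead of treating them independently; the data and names here are illustrative only.

```python
import random
import statistics

# Hypothetical training set: four tiny 4-pixel grayscale "images" (values 0-255).
train = [
    [10, 200, 30, 90],
    [20, 180, 50, 110],
    [15, 220, 40, 70],
    [25, 190, 60, 130],
]
# Group the training values by pixel position.
pixels = list(zip(*train))

def average_model():
    """Deterministic: per-pixel mean. Identical output on every call, so not generative."""
    return [statistics.mean(p) for p in pixels]

# Crude generative model (assumption): each pixel is an independent Gaussian
# with the mean and standard deviation observed in the training data.
params = [(statistics.mean(p), statistics.stdev(p)) for p in pixels]

def sample_model(rng):
    """Probabilistic: draw a new image, clamped to the valid 0-255 range."""
    return [min(255, max(0, round(rng.gauss(mu, sigma)))) for mu, sigma in params]

print(average_model())  # per-pixel means (17.5, 197.5, 45, 100), same every call

rng = random.Random(0)
for _ in range(3):
    print(sample_model(rng))  # typically a different image on each draw
```

Seeding `random.Random` makes the run reproducible, but each call to `sample_model` still consumes fresh randomness, which is what lets the model produce many variations instead of one fixed answer.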

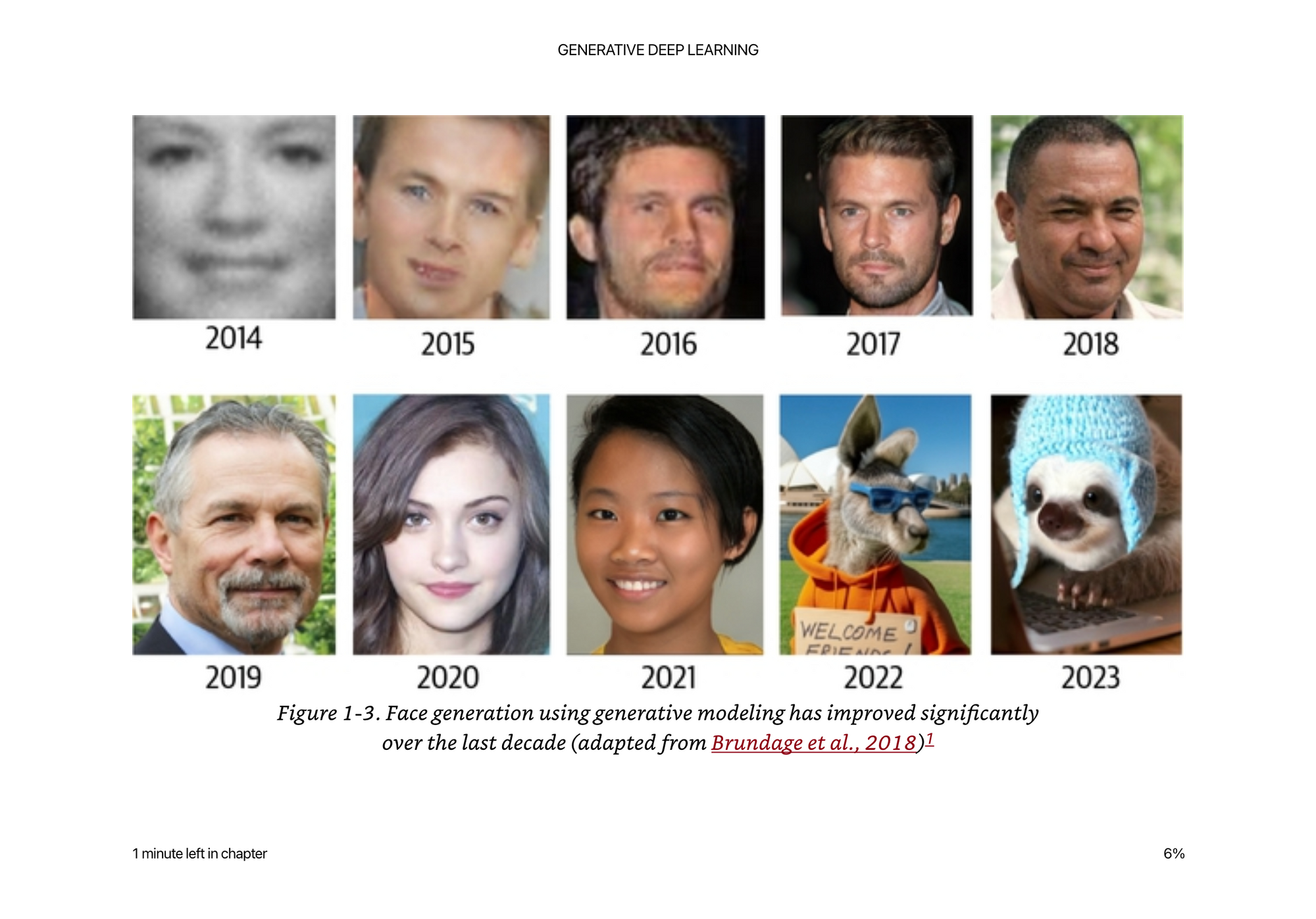

Generative modeling of images has been getting better and better year over year. I think video is next. Video has the interesting property of having to predict the future from the present, and it will probably need a decent amount of guidance, or it might go off the rails. If you hold a pen straight up and down and let go of it, it could fall in many different directions; which direction is correct?

Generative models have been getting progressively more impressive year by year.

Use cases for generative imagery

- Game industry assets

- Cinematography

- Iconography

- Lottie animations

- Web graphics

- Logos

- Generative websites

Use cases for generative text

- Copywriting

- Brainstorming

- Encoding natural language inputs into other generative systems